The Crowdsourcing Process: Decisions about Tasks, Expertise, Communities and Platforms

July 17, 2013, 08:30 | Long Paper, Embassy Regents D

Introduction

Businesses, governments, community groups and academic projects are turning to crowdsourcing, an internet-based process, to facilitate access to the outside expertise needed to complete various tasks (Brabham, 2008; Howe, 2006). This mechanism holds great potential for academic projects, particularly those involving large amounts of data that need to be identified, transcribed, analyzed and/or catalogued. It can often supplement meager project budgets by sourcing the work at relatively low cost (Corney et al., 2009; Holley, 2010). Further, crowdsourcing supports growing calls for public engagement in research projects (SSHRC, 2012). Several academic projects are at the forefront of this trend, with public involvement in data classification (Galaxy Zoo, 2010), error correction in texts (Holley, 2009), text transcription and/or annotation (Transcribe Bentham, 2012; Zou, 2011; Pynchonwiki, nd; Siemens, 2012), cataloguing, metadata and database creation (The Bodleian Library, 2012; Picture Australia, nd), and many other tasks.

As crowdsourcing is introduced into more activities, it becomes important to understand “how to manage the crowd in a networked society” to ensure that a project achieves its objectives (DISH, 2011). Initial studies of participant motivation have found that individuals are interested in participating in a larger cause, using their skills, earning recognition, being part of a community, and, potentially, earning money (Wexler, 2011; Organisciak, 2010; Raddick et al., 2010; Brabham, 2008, 2010). Other research has focused on the most appropriate ways to solicit and encourage participation among a potential community of contributors: making the activity in question fun, presenting a big challenge to be undertaken, and reporting on progress regularly (Digital Fishers, nd; Holley, 2012). However, most of this research has been conducted within the private sector and on short-term projects with little need to manage volunteers over a longer period of time (Wexler, 2011), which may limit the applicability of the results to academic projects.

While the use of crowdsourcing is increasing, little work has been done to understand how to organize the work so that the crowd’s contribution is delivered within an academic project’s schedule, budget and other resources, and to the required quality standard (Geiger et al., 2011; Organisciak, 2011). In particular, projects need to understand the most appropriate ways to organize workflows, technical infrastructure, and staffing to manage volunteers and confirm quality (Zou, 2011). An opportunity exists to build on the reflections of several projects to understand these issues (DISH, 2011; Holley, 2012; Zou, 2011; Corney et al., 2009; Holley, 2009).

This paper will contribute to this discussion by reviewing the literature to suggest a crowdsourcing process framework and to explore the range of decisions that must be addressed in advance to ensure quality and successful project outcomes. In addition, it will report on interviews with members of several crowdsourcing projects regarding their workflow organization. The paper will conclude with recommendations for projects contemplating crowdsourcing as a way to reach project outcomes with limited funds.

Literature Review

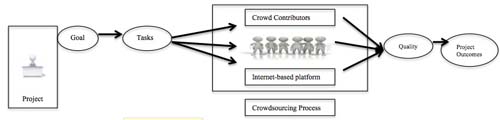

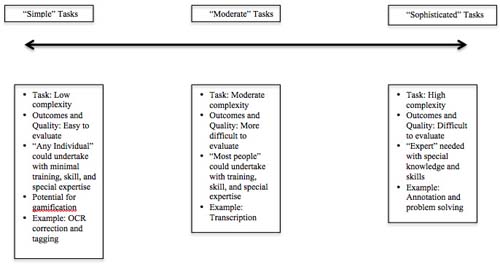

While no common definition exists for crowdsourcing (Holley, 2010), every crowdsourcing project shares several components. As seen in Figure 1, there must be an organization; a particular task to be completed to meet project goals and outcomes at a specified quality level; and a community, composed of both experts and novices, that is willing to do the work for little or no money. These interactions are facilitated through an internet-based platform (Brabham, 2012; Schenk et al., 2011; Geiger et al., 2011; Tong et al., 2012). The interested organization must make a series of decisions regarding the type of expertise, qualification and/or knowledge required, the presence of contributors, the mechanisms by which they will participate and contribute, project remuneration, motivators to keep participants engaged, and quality control mechanisms (Geiger et al., 2011; Corney et al., 2009; Rouse, 2010; Organisciak, 2011). The range of tasks and required expertise can be seen along the continuum in Figure 2.

Figure 1: Crowdsourcing Approach

(Adapted from Geiger et al., 2011; Corney et al., 2009; Rouse, 2010)

Figure 2: Crowdsourcing Task Continuum

(Adapted from Corney et al., 2009; Rouse, 2010)

Ultimately, projects select the appropriate interface to both solicit and receive contributions from the public in ways that keep the “crowd” motivated and participating (Tong et al., 2012; Organisciak, 2010, 2011).

Methods

This project uses a qualitative research approach based on in-depth interviews with members of crowdsourcing projects. The interview questions focus on the participants’ use of the “crowd” to achieve tasks, the ways they organize workflows, the type of infrastructure in place to support the work, and the challenges encountered (Marshall et al., 1999; McCracken, 1988).

At the time of writing this proposal, final data analysis is being completed. The results will inform the decisions that projects must make to use crowdsourcing effectively and successfully.

This research will make several contributions to the knowledge base about ways to incorporate the public into academic projects. First, it builds on work already undertaken to build community and engage the public in academic research through crowdsourcing (Causer et al., 2012; Holley, 2009; Organisciak, 2010). Second, it builds on earlier studies of participants’ motivations for joining these projects by exploring the organization of the tasks, process, and other components of crowdsourcing from the perspective of the project itself (Brabham, 2010; Organisciak, 2010; Wexler, 2011). Finally, it extends understanding of the nature of academic collaboration as projects expand relationships beyond the team to the public (Siemens, 2009).