Eighteenth- and Twenty-First-Century Genres of Topical Knowledge

July 17, 2013, 10:30 | Short Paper, Embassy Regents E

Our paper examines the similarities and differences between two technologies for representing topical information from different historical periods. Taking the 1784 index to Adam Smith’s seminal theory of political economy, An Inquiry into the Nature and Causes of the Wealth of Nations (1776), as a case study, we investigate the contrasting logics and aesthetics of knowledge organization apparent in the lateeighteenthcentury index and the twentyfirstcentury topic modeling algorithm known as latent dirichlet allocation. To accomplish this, we have collaborated to create a tool we call the Networked Corpus. [1] Inspired by the way readers mark passages that share a common theme, trope, or other feature, the Networked Corpus provides a computational way to navigate a corpus based on a topic model, while also facilitating comparison between this mechanical model and the humancreated index of Smith’s text. Our presentation will suggest a historical lineage between modern topic modeling and the eighteenthcentury concepts of topic (which developed from the rhetorical idea of “topos”) and system (which emerged in the allencompassing moral philosophy of the period) that are both exhibited in the index. We argue that the intentionally anachronistic comparison of topic modeling with the eighteenthcentury index reveals similarities and differences between what each approach counts as a salient feature of a text for the purpose of organizing and representing its topical, informational, or conceptual content.

Topic modeling is a family of statistical methods that attempt to find “latent” semantic content in texts, under the assumption that texts exhibit mixtures of “topics” that have characteristic vocabularies. [2] Topic modeling software attempts to find the topic definitions that best fit a given set of texts, while inferring which texts exhibit which “topics” based on the words that they use. This method was originally proposed as an information retrieval tool, with the goal of enabling people to search for broad themes that cannot necessarily be identified with particular words. As such, it competes with the subject index; but it differs from traditional indexing both in its assumptions and in the form the output takes. Preparing a subject index generally involves imagining what a reader might want to find — “think of the user” is the “motto” given in G. Norman Knight’s classic indexing textbook — and results in a product that suits those who can describe what they are looking for. [3] Topic modeling, by contrast, is based on a generative model —an abstract description of the process through which texts were produced — and constructs “topics” that do not necessarily correspond to anything that can be easily described. Using the output of a topic model as an index requires that the topics be labeled, something that requires an interpretive judgment that is often very difficult to make, and that can often, as scholars using topic modeling have argued, be misleading. [4]

The first stage of our project was an attempt to create an information retrieval program that better suits this limitation of topic modeling than forms that are tailored for users who already know what they are looking for, such as the index or the search engine. We wrote a Python script that takes in a collection of texts and the output of the topic modeling program MALLET, and produces an HTML version of the corpus with interactive navigation features. [5] In addition to an index of the “topics” in the model, the output includes asterisks in the margin next to passages where there is a particularly high concentration of a given topic relative to the concentration in the text as a whole — “exemplary” passages, as we are calling them. Clicking on an asterisk summons a popup box that contains links to other “exemplary” passages for the same topic. This construct is intended to enable navigation not from a “topic” to a passage, as in an index, but from one passage to another. It encourages the user to read until they come across something interesting that has been marked with an asterisk, and then see where the links go. The networklike structure of this tool gives the topic model an exploratory function that does not depend on any prior knowledge of what topics there are, or of what, if any, significance the topics have.

The Networked Corpus also includes features that are intended to make topic models easier to interpret. The user has the option to “explain the relevance” of a topic, showing a box listing the words most strongly associated with that topic, the “exemplary” passages that the program found for that topic, and other texts in the corpus that also contain a high concentration of that topic. The “explain” feature also highlights all of the words in the text that arose from the selected topic according to the model, and shows the density of the topic over the course of the text as a sideways line graph that runs in the margin. This visualization gives an idea of which parts of a text contribute to its association with a given topic and which do not, providing a rich body of both positive and negative evidence by which the topic model can be interpreted.

In the second stage of our project, we are investigating how the “topics” of a topic model differ from the notion of topic or subject that was employed in the lateeighteenthcentury index. Recently literary and intellectual historians including Ann M. Blair and Leah Price have described the historical development of practices of indexing, commonplacing, and generally what we might call “topical” knowledge in the seventeenth and eighteenth centuries. [6] As a lecturer in rhetoric and belles lettres and later a professor of moral philosophy, Adam Smith participated in defining the significance of those activities for the emergence of modern disciplines such as literature, history, and political economy. Upon the publication of the first edition of The Wealth of Nations without an index, Smith’s friend and fellow university professor, Hugh Blair, encouraged him to add an index and a syllabus like the ones they used “to give in [their] college lectures” because those additions would offer “Exhibit a Scientifical View of the Whole System." [7] For Blair, like Smith, representing the knowledge a text contained in various comprehensible and manageable forms was a critial aspect of the production of new knowledge.

Our paper considers whether new epistemological units produced by digital methods may facilitate comparative examination of similar ones from earlier periods. More specifically, we track the development of topical knowledge in the eighteenth century by comparing indices and commonplace books of the period to algorithmically produced “topics.” During the last year we have collaborated to create a tool we call the Networked Corpus. Inspired by the way readers mark passages that share a common theme, trope, or other feature, the Networked Corpus provides a computational way to connect topics across the entire range of a corpus. We are currently working on a project that compares the historical changes to the content and form of eighteenth-century indices and commonplace books and topic modeling output from the corpus.

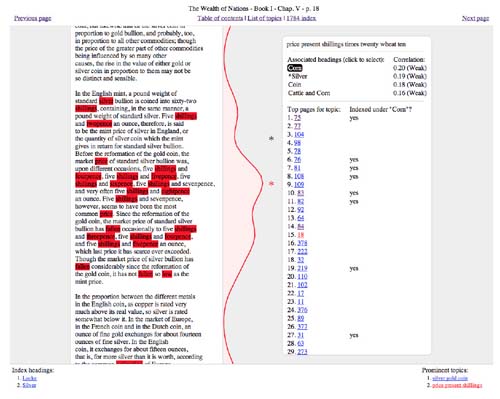

This “scientifical” approach to indexing suggests a particular model of the text, in the sense that Willard McCarty uses the word “model” — a “fictional or idealized representation” that one can see the text through. [8] The models actually employed in the construction of Scottish Enlightenment texts cannot, of course, be directly observed, but as a way of investigating one of these models speculatively, we produced a special version of the Networked Corpus for The Wealth of Nations that presents the highly detailed index that appeared in the 1784 edition of the book alongside a topic model generated from the text (Figure 1). To facilitate comparison of these two constructs at a conceptual level, we transformed both the index and the topic model into something similar to marginal annotations, showing all of the index headings that reference a page on the screen when the page is displayed, along with a list of prominent topics on the page. We also used the Spearman rank correlation to find topics that tend to strongly match the pages referenced under particular index headings, and indicated the pages on which these correlations break down. Based on a reading of these points of disagreement, we contend that the topic model is able to pick up on rhetorical moves in the text that are not represented within the sort of system of concepts that the index constructs, at the cost of never being able to claim the sort of exhaustiveness that the Scottish Enlightenment writers sought.

In this presentation, we suggest that the approach we have taken in our study of The Wealth of Nations — comparing constructs from different time periods that address a problem in radically different ways — opens up a new avenue for examining both contemporary text mining models and the models that are implicit in the organization of historical texts. As McCarty has observed, modeling supports an “orientation to questioning rather than to answers, and opening up rather than glossing over the inevitable discrepancies between representation and reality.” [9] Our deformation of The Wealth of Nations employs a statistical model not as a way of studying the text itself, but as a vantage point from which we can examine the assumptions and blind spots of another, historical model of textual organization, turning this discrepancy into something of hermeneutic use. We thus agree with Alan Liu, who encourages scholars to pursue “any mediation that produces a sense of anachronism (residual or emergent, in Raymond Williams’s vocabulary) able to make us see history as a compound relation of proximity and distance between past and present.” [10] With the Networked Corpus, we suggest a way of doing this that converts the alienness of mechanical methods of reading in comparison to older models into a productive source of tension.

Figure 1:

Screen shot from the Networked Corpus